Setting Up An Environment

In this tutorial, we will learn how to set up a Gym-compatible environment interface to control the Franka Emika Panda robot. This can be changed for multiple robots/different robots/more objects and sensors as well.

Prerequisites

- A Franka Emika Panda robot is present and correctly configured in the environment.

- An additional object (e.g., a ball) that is actively publishing its ground-truth state to an observation channel.

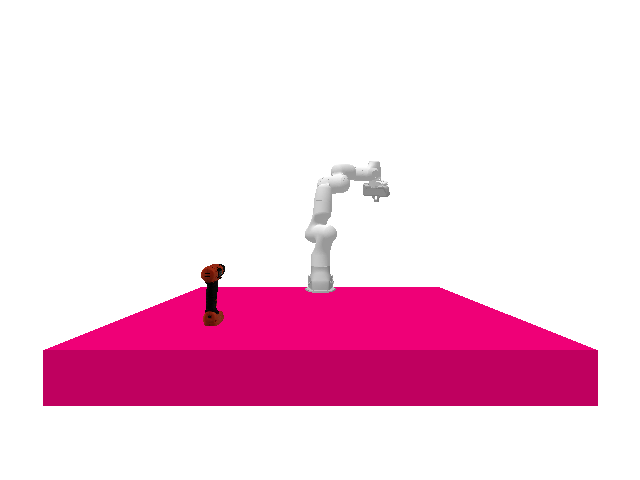

In this example we assume you are running a simulation that has the same set up seen below:

Setting up the Environment

Add a file called franka_env.py in the scripts folder.

This file defines a custom environment FrankaEnv that integrates with the Ark=robotics framework. It is designed to control and observe a Franka Emika Panda robot interacting with a ball object, either in simulation or the real world.

📦 File Structure

from ark.env.ark_env import ArkEnv

from arktypes import joint_group_command_t, joint_state_t, rigid_body_state_t

from arktypes.utils import pack, unpac- ArkEnv: Base class providing simulation and communication utilities.

- arktypes: Message types used in action and observation channels.

- pack/unpack: Helpers for serializing/deserializing messages to/from LCM.

🧠 Class Overview: FrankaEnv

class FrankaEnv(ArkEnv):This class wraps around ArkEnv and defines an environment tailored for the Franka robot. It is compliant with standard RL environment conventions.

Key Elements:

In this case we will define our action and observation channels as below

- Action Channel:

Franka/joint_group_command/sim→joint_group_command_tSends desired joint positions.

- Observation Channels:

Franka/joint_states/sim→joint_state_tReceives joint angles from Franka.

ball/ground_truth/sim→rigid_body_state_tReceives position and orientation of the ball.

🔧 Constructor

def __init__(self, config=None, sim=True)- Initializes the environment.

config: path or dict to the system configuration YAML.

sim: whether to run in simulation or real-world mode.

environment_name = "Franka_Enviroment"

- Sets the internal name of the environment (used for naming the Envrioment and Gym node).

action_space = {

'Franka/joint_group_command/sim': joint_group_command_t,

}- Defines the action channel and its Ark message type.

- Sends joint commands to Franka.

observation_space = {

'Franka/joint_states/sim': joint_state_t,

'ball/ground_truth/sim': rigid_body_state_t,

}- Defines two observation channels:

Franka/joint_states/sim: receives robot joint angles.

ball/ground_truth/sim: receives the pose of a ball in the scene.

super().__init__(

environment_name=environment_name,

action_channels=action_space,

observation_channels=observation_space,

global_config=config,

sim=sim

)- Calls the parent

ArkEnvconstructor, which:- Loads the config,

- Sets up communication,

- Creates action and observation spaces

🎮 Action Packing

python

CopyEdit

def action_packing(self, action):

- Converts a high-level action (list of joint angles) into a packed message to be sent over Ark Channels.

- The input to these functions is what ever you pass into the step function when you call it from the gym.

return {

'Franka/joint_group_command/sim': pack.joint_group_command(

name="all",

cmd=action,

)

}- Uses

joint_group_commandhelper to create a packed message.

- This function should return a dictionary where the keys should match the action channel name, and the value is the message.

👁️ Observation Unpacking

def observation_unpacking(self, observation):

- This function will return a dictionary with the keys being the observation channels and the values being the latest observations on these channels.

ball_position = unpack.unpack_rigid_body_state(

observation['ball/ground_truth/sim']

)- Decodes the position and orientation of the ball.

joint_states = unpack.unpack_joint_state(

observation['Franka/joint_states/sim']

- Decodes the joint angles from the robot.

return [ball_position, joint_states]- In this case we return a list of the two decoded observations as a list

- You can later process this into a vector or dictionary depending on use case.

🏁 Termination & Truncation Logic

def terminated_truncated_info(self, state, action, next_state):Currently we will leave this blank but is a placeholder logic to decide whether the episode should terminate or truncate, for more advanced RL/IL examples Currently returns:

(False, False, None)Can be extended based on task goals (e.g., if ball is reached or time limit exceeded).

🎯 Reward Function

def reward(self, state, action, next_state):This can also be customised to reflect task-specific rewards (e.g., distance between gripper and ball).

🔄 Environment Reset

def reset_objects(self):Called during env.reset(). Responsible for putting all objects back into their initial state.

You can call reset component function to reset the objects in the simulation.

self.reset_component("Franka")Resets the Franka robot using configuration or user-defined values.

Optional keyword arguments if resetting a robot is:

- Base Position

- Base Orientation

- Initial Configuration

If left blank it will default to the values given in the config file.

self.reset_component("ball", base_position=[-0.5, 1, 0.5])Likewise we can reset the ball to any position of our choosing.

Optional keyword arguments if resetting an object is:

- Base Position

- Base Orientation

If left blank it will default to the values given in the config file.

✅ Summary Table

| Function | What it Does |

__init__ | Sets up the FrankaEnv with channels and configuration |

action_packing | Packs joint commands into LCM messages |

observation_unpacking | Decodes robot and object state from LCM messages |

terminated_truncated_info | Defines episode end conditions (currently returns False) |

reward | Computes scalar reward (currently always 0.0) |

reset_objects | Sends reset services to robot and ball |

Code can be found:

Next Steps